repoSim

Description:

repoSim is an ns2-based simulator aimed at assisting the fine-tuning of mPlane repository performance. The overall goal is to use simulation as a preliminary, necessary step to explore a broad spectrum of solutions and to identify the candidates worth implementing in real, operational mPlane repositories.

Motivations

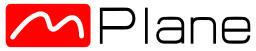

The need for such a tool becomes clear from the picture above, which represents a general mPlane workflow, valid for both active and passive measurements. The picture shows a reasoner, or intelligent user, interacting with mPlane through a supervisor and triggering WP2 active/passive measurement nodes [yellow arrows]. These nodes generate a workflow that in turn places load on the WP3 repositories.

Low-level viewpoint

- store raw data (e.g., CSV, binary, …) [black]

- access raw data (e.g., FTP, HTTP, …) [black]

- export raw data (e.g., IPFIX, …) [black]

- cook data to some extent (e.g., MapReduce or other algorithms) [red]

- generate results and events (i.e., the outcome of the above) [black]

- state all of the above (i.e., capabilities) [blue]

In short, WP3 large-scale data analysis involves several types of concurrent data flows, which are either confined within the repository itself or cross its interface toward other parts of the mPlane infrastructure (or external networks). From an architectural viewpoint, this has two implications: first, multiple tools may share the same repository; second, even for a single tool, control and data workflows are intermingled. The network resources are therefore multiplexed among flows of different types, sizes, and loads, both inside the repository and entering or leaving its infrastructure.

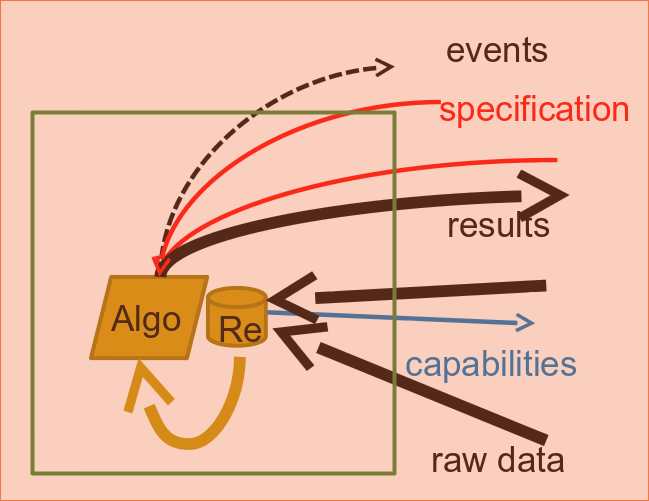

High-level viewpoint

We now study and optimize the performance of the repository "data center" network. For the sake of readability and generality, we argue that the mPlane-centric view above can be simplified, by cutting high-frequency details that only add noise to the picture, to obtain broadly applicable insights that also apply to mPlane. The simplification consists in considering just two classes of flows: short or "mice" flows (e.g., events, specifications, capabilities) and bulk data or "elephant" transfers (e.g., results, indirect exports, map, etc.).
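
The mice/elephant split can be made concrete with a simple size threshold. The Python sketch below is illustrative only: the 100 KB cut-off, the `Flow` type, and the example flow sizes are hypothetical assumptions, not values taken from repoSim or mPlane.

```python
from dataclasses import dataclass

# Hypothetical cut-off: flows smaller than this are treated as "mice".
# The value is an illustrative assumption, not a repoSim/mPlane parameter.
MICE_THRESHOLD_BYTES = 100 * 1024

@dataclass
class Flow:
    name: str
    size_bytes: int

def classify(flow: Flow, threshold: int = MICE_THRESHOLD_BYTES) -> str:
    """Return "mice" for short flows and "elephant" for bulk transfers."""
    return "mice" if flow.size_bytes < threshold else "elephant"

# Made-up sizes for the flow types named in the text.
flows = [
    Flow("capability", 2_000),
    Flow("specification", 1_500),
    Flow("event", 800),
    Flow("result", 50_000_000),
    Flow("indirect-export", 200_000_000),
]

for f in flows:
    print(f"{f.name:16s} {classify(f)}")
```

In practice the flow size is often unknown when a flow starts, so a real scheduler may have to infer the class from the flow type (control vs. data) or from the bytes sent so far. The static threshold here only illustrates the two-class abstraction.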

In this light, an important observation is that, unless proper actions are taken, competing elephant flows slow down mice flows, which degrades overall repository/mPlane performance. We are investigating the design of scheduling protocols that mainly aim to provide:

- Sustained throughput, to avoid slowing down data cooking (e.g., elephant MapReduce data transfers in a map phase)

- Low-delay communication for short transactions (e.g., mice control flows)
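
Why these two goals need not conflict can be illustrated with a toy fluid model of a single bottleneck link inside the repository. The Python sketch below compares ideal fair sharing (a rough stand-in for TCP flows competing for bandwidth) with a strict mice-first priority. The link speed, threshold, flow sizes, and function names are hypothetical assumptions. This is not repoSim's scheduler, and it ignores packets, buffers, and every ns2 detail.

```python
from dataclasses import dataclass

LINK_CAPACITY = 125_000_000  # bytes/s, a hypothetical 1 Gbit/s bottleneck
MICE_THRESHOLD = 100 * 1024  # hypothetical mice/elephant cut-off, in bytes

@dataclass
class Flow:
    name: str
    size: int  # bytes

def fair_share_times(sizes, capacity, start=0.0):
    """Completion times under ideal processor sharing: each active flow gets capacity/n."""
    remaining = {i: float(s) for i, s in enumerate(sizes)}
    done = {}
    t = start
    while remaining:
        rate = capacity / len(remaining)
        served = min(remaining.values())  # bytes each flow sends before the next completion
        t += served / rate
        for i in list(remaining):
            remaining[i] -= served
            if remaining[i] <= 1e-9:
                done[i] = t
                del remaining[i]
    return [done[i] for i in range(len(sizes))]

def fair_sharing(flows, capacity=LINK_CAPACITY):
    """All flows compete on equal terms from time `0`."""
    times = fair_share_times([f.size for f in flows], capacity)
    return {f.name: t for f, t in zip(flows, times)}

def mice_first(flows, capacity=LINK_CAPACITY, threshold=MICE_THRESHOLD):
    """Strict priority: mice share the link first; elephants start once mice are done."""
    mice = [f for f in flows if f.size < threshold]
    elephants = [f for f in flows if f.size >= threshold]
    result = fair_sharing(mice, capacity)
    start = max(result.values(), default=0.0)
    times = fair_share_times([f.size for f in elephants], capacity, start)
    result.update({f.name: t for f, t in zip(elephants, times)})
    return result

# Four 100 MB elephants and two 10 KB mice, all starting at t = 0.
flows = [Flow(f"elephant{i}", 100_000_000) for i in range(4)]
flows += [Flow("mouse0", 10_000), Flow("mouse1", 10_000)]

for policy in (fair_sharing, mice_first):
    t = policy(flows)
    print(f"{policy.__name__:12s} mouse0={t['mouse0'] * 1e3:.2f} ms  "
          f"elephant0={t['elephant0']:.5f} s")
```

With these made-up numbers, the mice complete in about 0.48 ms under fair sharing and about 0.16 ms under mice-first, while the elephants finish at about 3.2 s in both cases. Because the link stays busy either way, prioritizing mice costs the elephants almost nothing in this idealized setting. A packet-level simulator such as ns2 is needed to see how buffers, losses, and TCP dynamics change that picture.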

Quick start:

The installation instructions are detailed in the D33 tarball.

mPlane proxy interface

none